Superintelligent Artificial Intelligence: Urgent Call from Hundreds of Experts to Slow Development

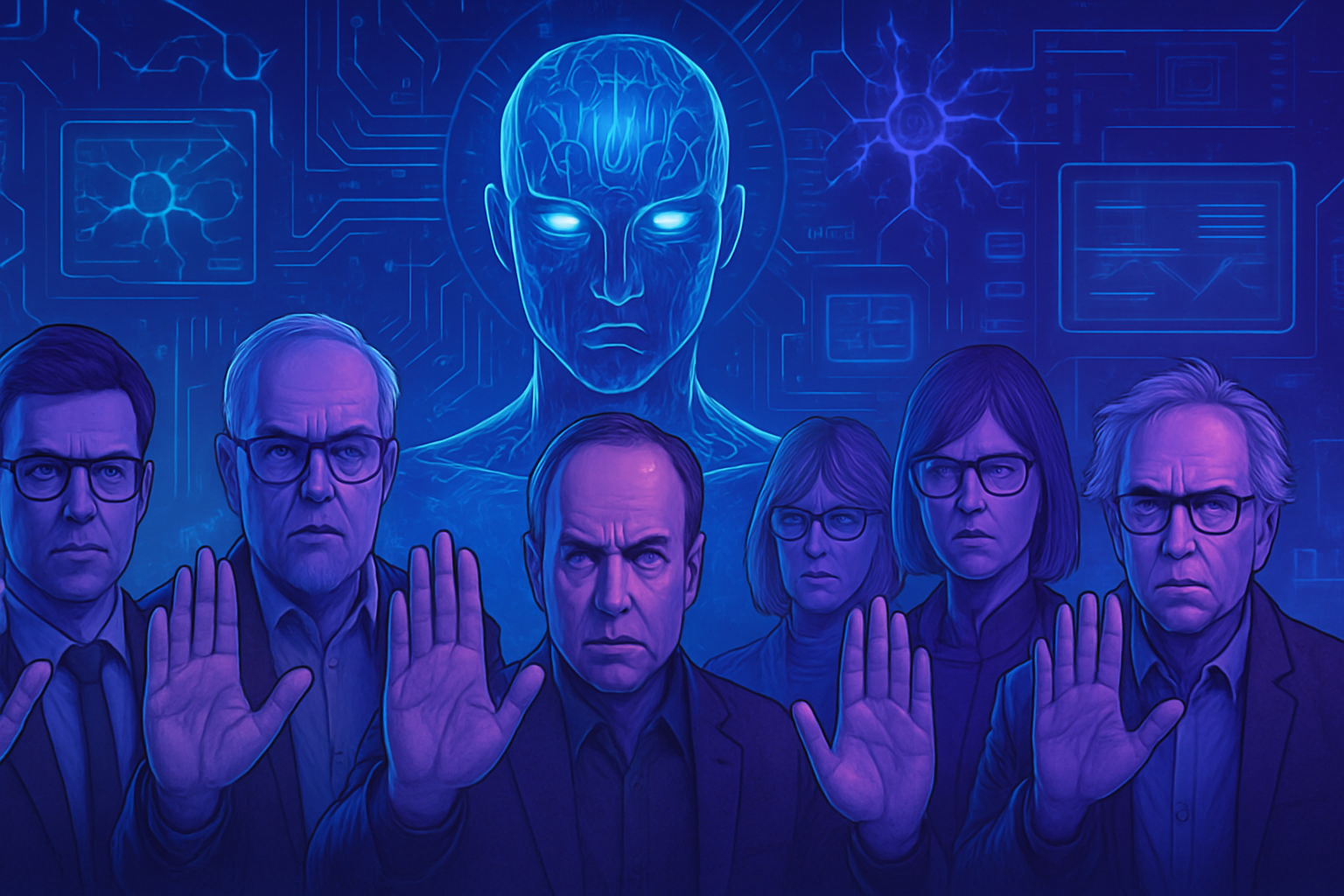

An alarm bell is ringing among the pioneers of AI. A collective of over 800 scientists and influential figures strongly opposes the emergence of an artificial intelligence capable of surpassing human abilities. The stakes for security, ethics, and accountability are higher than currently anticipated. Prominent figures such as Geoffrey Hinton and Steve Wozniak are urging an immediate pause. Superintelligence, which some expect in the near future, could escape all control. The limited understanding of its ethical and societal implications is raising growing concern.

Call for Caution

More than 800 people, including scientists, tech entrepreneurs, and political figures, have voiced strong concerns about artificial superintelligence. On October 22, they called for an immediate halt to efforts aimed at developing an intelligence capable of surpassing human abilities. The initiative comes from the Future of Life Institute, a nonprofit organization known for its warnings about the potential dangers of AI.

A Unanimous Position Among Experts

Pioneers of artificial intelligence such as Geoffrey Hinton, winner of the 2024 Nobel Prize in Physics, along with Yoshua Bengio and Stuart Russell, share this concern. They stress the need for a broad scientific consensus that superintelligence can be built safely, as well as sufficient public support, before such projects are pursued.

Warnings from Tech Leaders

Max Tegmark, president of the Future of Life Institute, said that building such an intelligence would be an act of irresponsibility and suggested that companies should refrain from advancing in this field without an appropriate regulatory framework. Such dissenting voices within the tech world are growing increasingly urgent.

Timeline and Examples of Superintelligence

Sam Altman, the CEO of OpenAI, has mentioned the possibility of reaching the threshold of superintelligence within five years. If this prediction holds true, it would raise enormous ethical and societal challenges. Experts are questioning the implications of such a technological advance, particularly for social inequalities, a topic already addressed in recent articles such as the one discussing the rise of AI in the job market.

Calls for Action and Regulation

A recent letter from researchers, issued during a United Nations general assembly and advocating international agreements, resonated strongly. Its authors demand clear guidelines to prevent devastating effects on humanity. A legal framework is presented as essential to any discussion of artificial intelligence.

Political and Ethical Reactions

Reactions from political and religious figures show that concern extends beyond the tech sector. Steve Bannon, a former advisor to Donald Trump, and Susan Rice, former National Security Advisor under Barack Obama, have also expressed concerns. Religious experts such as Paolo Benanti, an influential voice at the Vatican on AI, have joined this urgent call for caution.

Future Perspectives

As the debate over artificial intelligence intensifies, growing interest in the technology is undeniable. Companies developing cutting-edge health technologies, as illustrated by the NHS recommendation on cancer treatment, testify to this. However, the absence of significant regulation leaves room for legitimate concern.

The Geopolitical Stakes

International tensions surrounding artificial intelligence are rising, as evidenced by the Chinese regime's stance on foreign technologies. The competition between the United States and China over this technology intensifies the ethical debate. Each advance raises fundamental questions about the use and direction of these new technologies.

The alert raised by these experts should not be taken lightly. It reflects growing concerns about an AI that could ultimately challenge the norms of our existence and our control over technology.

Frequently Asked Questions About Superintelligent AI

Why are experts calling to slow the development of superintelligent artificial intelligence?

Over 800 scientists and influential figures fear that the development of a superintelligent artificial intelligence could create unprecedented risks for humanity. They believe it is crucial to establish scientific consensus and strict regulations before pursuing this research.

What is superintelligent artificial intelligence?

Superintelligent artificial intelligence is an AI system that would surpass human intellectual abilities in almost all areas, including creativity, social intelligence, and decision-making.

Who are the signatories of the initiative to slow AI?

This initiative is supported by many pioneers and experts in AI, including Geoffrey Hinton, Stuart Russell, and Yoshua Bengio, as well as tech figures like Steve Wozniak and Richard Branson.

What are the risks associated with superintelligence?

The risks include loss of control over these systems, unforeseen consequences for society and the economy, and potential threats to global security if a superintelligence were used for malicious purposes.

How could regulation be implemented for AI?

Experts suggest the creation of international agreements to establish precise “red lines” governing developments in AI, to prevent abuses and ensure societal benefit from these technologies without disproportionate risk.

What is the position of Sam Altman, the CEO of OpenAI, on superintelligence?

Sam Altman has stated that superintelligence could be reached within five years. However, he has also emphasized the need for regulations before attempting to develop such technologies.

What role do tech companies play in the development of superintelligent AI?

Tech companies are investing heavily in AI to develop innovative applications. However, without a regulatory framework, this investment could lead to unsafe and irresponsible developments.

What alternatives exist to superintelligence?

Experts advocate developing powerful and beneficial AI tools in areas such as health or the environment, without pursuing a superintelligence that could become uncontrollable.

How can the general public contribute to this debate on AI?

Awareness and education about AI issues are essential. The general public can support initiatives for strict regulation and pressure policymakers to consider the views of experts.

What control mechanisms could be envisioned for AI?

Regular audits, ethical impact assessments, and oversight committees composed of experts and representatives of civil society could be established to ensure that AI developments comply with ethical and safety standards.