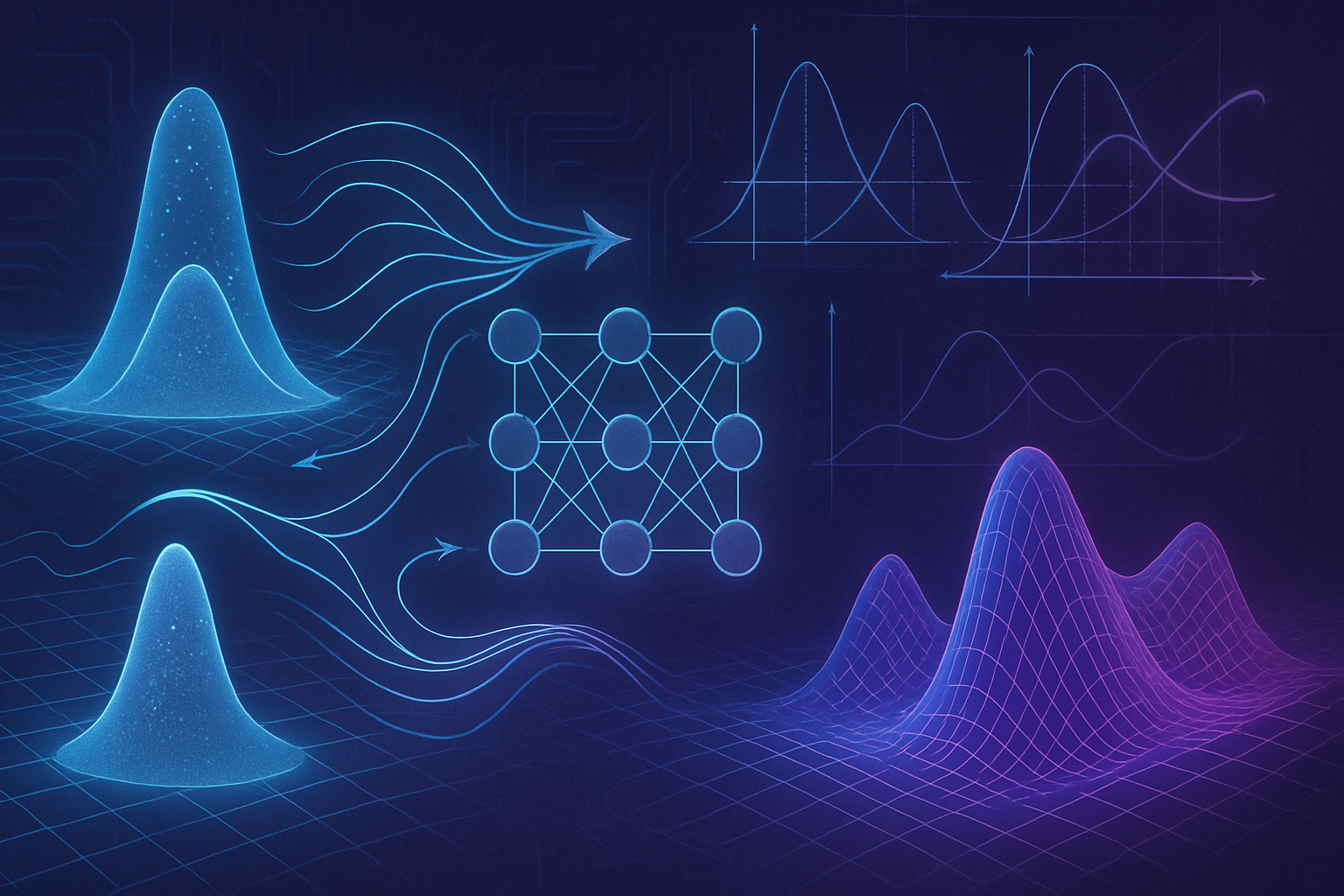

A productive connection is emerging between thermodynamics and machine learning. Generative models based on diffusion dynamics need continual refinement to meet growing demands for accuracy and efficiency, and optimal transport theory turns out to be a key tool for understanding how they work. By bringing principles of non-equilibrium thermodynamics into machine learning, researchers are giving these models a firmer theoretical footing, one that aims to make them not only more robust and accurate but also easier to interpret. The interplay between the two disciplines also opens new perspectives on information processing, both biological and artificial.

A thermodynamic approach in machine learning

A study by researchers at the University of Tokyo, led by Sosuke Ito, revealed a deep link between non-equilibrium thermodynamics and optimal transport theory, and shows how these concepts can improve generative models in machine learning. Non-equilibrium thermodynamics deals with systems that evolve dynamically, yet its relevance to machine learning has not been fully exploited until now.

Diffusion models and their functioning

Diffusion models, which power generative image algorithms, have advanced remarkably. During training, they progressively add noise to the original data. From these diffusion dynamics, the model learns how to remove that noise when generating new data. New content is produced by running the dynamics in reverse temporal order, and how this reversal is carried out determines the quality of the result.
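The noising step described above can be sketched in a few lines. This is a minimal illustration, not the study's method: the linear schedule, its endpoint values, and the names `linear_betas`, `cumulative_signal`, and `add_noise` are all assumptions chosen for the example.

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (an assumed, commonly used choice)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal(betas):
    """alpha_bar[t]: running product of (1 - beta), the signal variance kept at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps, with eps ~ N(0, 1)."""
    scale_signal = math.sqrt(alpha_bar)
    scale_noise = math.sqrt(1.0 - alpha_bar)
    return [scale_signal * v + scale_noise * rng.gauss(0.0, 1.0) for v in x0]

rng = random.Random(0)           # fixed seed so the sketch is reproducible
betas = linear_betas(1000)
alpha_bars = cumulative_signal(betas)

x0 = [1.0, -1.0, 0.5]            # toy "data" vector
x_early = add_noise(x0, alpha_bars[0], rng)    # almost unchanged
x_late = add_noise(x0, alpha_bars[-1], rng)    # almost pure noise
```

Early in the process nearly all of the signal survives. By the final step almost none does, and that is the state from which generation starts when the dynamics are reversed.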

The choice of diffusion dynamics, often referred to as the noise schedule, remains a long-standing point of debate in the field. Previous work had indicated empirically that optimal transport dynamics perform well, but no theoretical demonstration had established why. The current research addresses this gap by providing a theoretical foundation.
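To give a feel for what "optimal transport dynamics" means, here is a small sketch for the simplest case, one-dimensional Gaussians, where the 2-Wasserstein distance and the optimal transport path have closed forms. The function names and the toy "data" and "noise" distributions are hypothetical, and the example does not reproduce the study's analysis.

```python
import math

def w2_gaussian_1d(m0, s0, m1, s1):
    """2-Wasserstein distance between N(m0, s0^2) and N(m1, s1^2) in one dimension."""
    return math.sqrt((m0 - m1) ** 2 + (s0 - s1) ** 2)

def ot_interpolate(m0, s0, m1, s1, t):
    """Point at time t in [0, 1] on the optimal transport path between the two Gaussians.

    For 1D Gaussians the path stays Gaussian, with mean and standard deviation
    interpolated linearly.
    """
    return (1 - t) * m0 + t * m1, (1 - t) * s0 + t * s1

data = (2.0, 0.5)    # (mean, std) of a toy data distribution
noise = (0.0, 1.0)   # (mean, std) of the standard normal noise distribution

total = w2_gaussian_1d(*data, *noise)

steps = 10
path = [ot_interpolate(*data, *noise, k / steps) for k in range(steps + 1)]
segment_lengths = [
    w2_gaussian_1d(*path[k], *path[k + 1]) for k in range(steps)
]

# Along the optimal transport path the segments are equal and sum to the total distance.
print(round(total, 4), round(sum(segment_lengths), 4))
```

The equal segment lengths show that the distribution moves at constant speed along the shortest route between data and noise, with no detours. A schedule that wanders off this route covers more distance to reach the same endpoint.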

Thermodynamic relations and robustness of data generation

The researchers derived inequalities relating thermodynamic dissipation to the estimation error in data generation. Drawing on recent advances in thermodynamic trade-off relations, they showed how these inequalities characterize the robustness of data generated by diffusion models. The approach offers a principled way to define optimal protocols when designing generative models.
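For orientation, one well-known trade-off of this family is the thermodynamic speed limit for overdamped diffusion. It is shown here only as an illustration of how dissipation and optimal transport are linked, and it is not the specific inequality derived in the study. The symbols are assumptions of this sketch: $\Sigma_\tau$ is the total entropy production over a process of duration $\tau$ (in units where $k_B = 1$), $W_2$ is the 2-Wasserstein distance between the initial and final distributions $p_0$ and $p_\tau$, and $D$ is a constant diffusion coefficient.

```latex
\Sigma_\tau \;\ge\; \frac{W_2(p_0, p_\tau)^2}{D\,\tau}
```

The bound is saturated when the distribution follows the optimal transport path at constant speed, so among all dynamics connecting the same two distributions in the same time, optimal transport dissipates the least.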

Academic contributions and future perspective

The project has also highlighted the role of undergraduate students, such as Kotaro Ikeda, in the quest for innovation. The research, partially conducted within the framework of a university course, demonstrates the commitment and skills of young researchers. This type of involvement fosters an enriching collaborative learning model, essential for the next generation of scientists.

The researchers hope their work will draw the machine learning community's attention to the importance of non-equilibrium thermodynamics. This could encourage further exploration of its usefulness for understanding biological and artificial information processing. Putting these theoretical concepts into practice could significantly change how generative models are designed.

Other applications and advancements in the field

The research also fits a broader trend in which principles from physics are integrated into artificial intelligence. Recent papers discuss how similar approaches can make AI applications faster and more precise. Physics continues to play a vital role in the evolution of AI.

For a broader perspective on recent advancements in the field of artificial intelligence and neural networks, one can refer to the works of pioneers such as Geoffrey Hinton and John Hopfield, who were recently awarded the Nobel Prize in Physics. Their contributions enrich the current research landscape, highlighting the synergies between physics and AI.

Staying informed about publications in reputable journals like Physical Review X is crucial to understanding how these concepts unfold in concrete applications, potentially leading to technological revolutions. For a more detailed analysis of these relationships between thermodynamics and machine learning, several articles and reports are available, such as those on major advancements in the field of artificial neural networks.

Frequently asked questions

What is the importance of non-equilibrium thermodynamics in machine learning?

Non-equilibrium thermodynamics helps to understand systems in constant evolution, which is crucial for improving the performance of generative models in machine learning.

How does optimal transport theory apply to diffusion models?

Optimal transport theory provides a mathematical framework for moving one probability distribution into another at minimal cost. In diffusion models, it describes the most economical way to transform the data distribution into noise and back when generating new data.

What are the advantages of diffusion models in image generation?

Diffusion models generate high-quality images by learning to remove noise that was added to the original data during training, then running those dynamics in reverse time to turn noise into new content step by step.

How do the inequalities relating thermodynamic dissipation to estimation error enhance the robustness of models?

The inequalities show that optimal transport dynamics yield more robust data generation, which means that generators built on them should be more reliable and efficient in practice.

What role do students play in research on thermodynamics and generative models?

Students, like those who contributed to this research, bring new perspectives and techniques, thus enriching the field while developing their skills in scientific research.

Why is non-equilibrium thermodynamics still underutilized in machine learning?

Although promising, non-equilibrium thermodynamics has not yet been fully exploited in the development of generative models, leaving room for more innovations and practical applications.

What are the current challenges in selecting diffusion dynamics in models?

The selection of diffusion dynamics, or noise schedule, remains a subject of debate, as there is no theoretical consensus on what works best across different data generation contexts.

How can the results of this study influence future research in machine learning?

The results emphasize the importance of exploring new theories, such as non-equilibrium thermodynamics, to guide the development of more effective techniques in image generation and other applications.